2.4.2 Uploading File Using Impulse UI

Impulse provides a convenient way to create a table and upload data to it. Data uploaded to impulse is partitioned and indexed for efficient query. This section describes how to upload data into a table using Impulse’s file upload mechanism.

Step 1: Upload Data

- From the main navigation menu, click “Load Data”

- Drag and drop as many files as you want to upload to a table. You may browse and upload files as well. See Figure 2.4.2a below.

- Fill out the form (See Figure 2.4.2b below):

- Warehouse: from the drop down, select the data warehouse in which you wish to create the table.

- Datasource: Give a meaningful name to your datasource. The datasource is analogous to a table in RDBMS paradigm. If the table name within the selected warehouse exists, the data will be uploaded in the existing table, else a new table will be created.

- Input Source: Since we are uploading, leave the default selection as “Browse & Upload”. For other types of input source, see the appropriate sections of this document.

- File Format: Select the appropriate file format of the data file you are uploading. The supported file formats are:

- Parquet

- CSV or comma separated values

- TSV or tab separated values

- PSV or pipe separated values

- JSON (line delimited) meaning each line in the file represents a single row

- Input Header: This field is optional. If your input file is delimited (csv, psv, tsv) and does not contain the field header as the first line in each uploaded file, provide a comma separated list of header. Leave this field empty if your input file contains the header, otherwise, the ingestion engine will try to ingest the first line as data and not as header.

- Click Next button to configure the indexing and partitioning of data for efficient query execution.

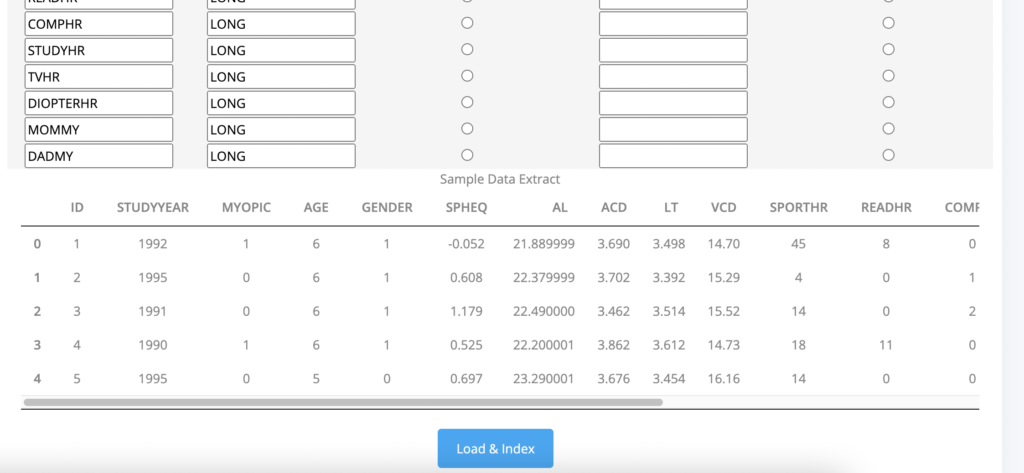

Step 2: Column Mapping and Partition Parameters

After clicking the “Next” button in step 1, the next page will shows the parameters for the step 2 (see Figure 2.4.2c for example). These parameters control how the data indexing and partitions will be created. The description of each field within this step is as follows:

**For the best result, use a date or time based column as the primary partition column. If none of the column can be parsed as a date/time, do not use any partition.**

- Datasource: the table name (as set in step 1 above)

- Secondary Partition Strategy: This defines the column or columns that will be used to create the secondary partition. Impulse supports two types of secondary partition strategies:

- Dynamic: This is the best partition strategy and does the most efficient partitioning based on the data. In most cases, you will leave this as the default secondary partition strategy.

- Single Column: If your data will have only one column in the group by or where clause, this single column based strategy will likely to work the best. However, this is highly discouraged to use a single column based partitioning.

- Primary Partition Granularity: If you have a date/time based primary column, this parameter specifies how your data will be split into partitions. For example, if you select a “day” for the granularity level, the entire data will be grouped by day and split into partitions.

- Missing Datetime Placeholder: If you select a date/time based column as the primary partition column and if any of the rows contains invalid/missing/null values for the primary partition column, it will fill the missing value with this placeholder datetime.

- Max Parallel Upload Tasks: This parameter defines how many threads the system will create to upload the data in parallel. For a single node deployment, this should be set at maximum of 60% of number of available CPU cores in your server. For example, if you have 32-core CPU, set the max parallel tasks as 20 or less. For a distributed cluster nodes, this value should be 60% of the sum of cores of all worker nodes.

- Upload Mode: Specify whether you want to append rows to and existing table or overwrite existing partition.

Field Mapping: System will try to guess the datatypes of each column. In case of incorrect interpretation, you should edit the datatypes of every column that were incorrectly interpreted. Only the “STRING” “LONG” and “DOUBLE” datatypes are supported. Dates are represented as a STRING datatype.

From the field mapping section, select the primary partition column, preferably a datetime column.

you must specify the datetime format of the primary partition column. ISO date format and joda-time datetime ( https://www.joda.org/joda-time/key_format.html ) format are supported.

If your secondary partition strategy is “Single Column” based, you must select the secondary partition column.

At the bottom of the page, the system displays a few lines of actual data to help you to see the datatype, format and sample values of the actual dataset.

To start indexing, click the “Load and Index” button.

This will open the “Tasks” page that shows a list of all active or completed indexing tasks. See Figure 2.4.2d as an example.